
Architect & Scale End-to-End Streaming Pipelines in the Cloud

12 Weeks. Live Online Classes. Instructor-led.


Operate lake-and-warehouse architectures that stay cost-efficient and observable

Design exactly-once pipelines with Kafka, Flink & Spark Structured Streaming

Serve online features and analytics at sub-second latency—no batch reloads

What will you learn?

Deliver insights in under five seconds. This course takes you from change-data-capture with Debezium and Kafka to stateful stream processing in Apache Flink and Spark Structured Streaming. You’ll land data in a Delta Lakehouse, serve online features for ML, and wrap the entire pipeline with Prometheus observability and Great Expectations data contracts. Perfect for engineers facing IoT, click-stream, or telemetry workloads who need exactly-once guarantees at cloud scale.

-

Use Debezium to mirror operational DB changes to Kafka with exactly-once semantics

Master Kafka partitions, compaction, schema registry & consumer-group design
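To give a flavour of the partitioning and compaction topics above, here is a minimal pure-Python sketch; it is not Kafka's implementation. The helper names `partition_for` and `compact` are hypothetical, and CRC32 stands in for the murmur2 hash that Kafka's default partitioner applies to keyed records.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition with a stable hash.

    Assumption: CRC32 is used only for illustration; Kafka's default
    partitioner hashes the key bytes with murmur2 instead.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def compact(log):
    """Simulate log compaction on a list of (key, value) records.

    Only the most recent record per key survives. A value of None acts
    as a tombstone, so the key disappears from the compacted log.
    Returns (offset, key, value) tuples ordered by original offset.
    """
    latest = {}
    for offset, (key, value) in enumerate(log):
        latest[key] = (offset, value)  # later offsets overwrite earlier ones
    survivors = [(off, k, v) for k, (off, v) in latest.items() if v is not None]
    return sorted(survivors)


# Hypothetical changelog: user-1 is updated once, user-2 is deleted.
log = [
    ("user-1", "a@x.io"),
    ("user-2", "b@x.io"),
    ("user-1", "a2@x.io"),
    ("user-2", None),
]
compacted = compact(log)
```

Because the same key always hashes to the same partition, per-key ordering is preserved, which is what makes "latest value per key" well defined in the first place.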

-

Build high-throughput joins & CEP patterns in Apache Flink and Spark Structured Streaming

Tune checkpoints, state back-ends, and watermarking for sub-second SLAs
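The watermarking idea mentioned above can be sketched without any streaming framework. The class below is an illustrative assumption, not Flink or Spark code: `TumblingWindowCounter`, `window_size`, and `allowed_lateness` are hypothetical names, and the watermark is simply the largest event time seen so far minus the allowed lateness.

```python
from collections import defaultdict


class TumblingWindowCounter:
    """Count events per event-time tumbling window, closing windows by watermark."""

    def __init__(self, window_size, allowed_lateness):
        self.window_size = window_size
        self.allowed_lateness = allowed_lateness
        self.max_event_time = float("-inf")
        self.open_windows = defaultdict(int)  # window start -> event count
        self.dropped = 0  # events that arrived after their window closed

    @property
    def watermark(self):
        # Everything at or before this time is assumed to have arrived.
        return self.max_event_time - self.allowed_lateness

    def process(self, event_time):
        """Ingest one event; return the (window_start, count) pairs it finalizes."""
        window_start = event_time - (event_time % self.window_size)
        if window_start + self.window_size <= self.watermark:
            self.dropped += 1  # too late: the window was already emitted
            return []
        self.open_windows[window_start] += 1
        self.max_event_time = max(self.max_event_time, event_time)
        closed = sorted(
            start for start in self.open_windows
            if start + self.window_size <= self.watermark
        )
        return [(start, self.open_windows.pop(start)) for start in closed]


counter = TumblingWindowCounter(window_size=10, allowed_lateness=5)
emitted = []
for t in [1, 4, 12, 3, 17, 2, 26]:  # event times, deliberately out of order
    emitted.extend(counter.process(t))
```

In this run the event at time 3 is out of order but still counted, while the event at time 2 arrives after window [0, 10) has closed and is dropped. A larger `allowed_lateness` trades latency for completeness, which is the tuning decision behind any sub-second SLA.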

-

Land streams in Parquet/Delta; automate schema evolution with Auto Loader

Create Bronze–Silver–Gold tables and enable time-travel querying for reprocessing

-

Feed aggregated features into Feast / Redis for real-time ML inference

Expose low-latency REST & gRPC services; benchmark p95 latency & QPS
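As a rough illustration of the benchmarking step above, the sketch below computes p95 latency and QPS from recorded request timings. The function names and the sample data are hypothetical, and the nearest-rank percentile definition is one common choice among several.

```python
def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not samples:
        raise ValueError("no samples recorded")
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100 avoids floating-point rounding surprises.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]


def summarize(latencies_ms, wall_clock_s):
    """Summarize a benchmark run: request count, p50, p95, and throughput."""
    return {
        "requests": len(latencies_ms),
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "qps": len(latencies_ms) / wall_clock_s,
    }


# Hypothetical run: 100 requests with latencies of 1..100 ms over 2 seconds.
summary = summarize(list(range(1, 101)), wall_clock_s=2.0)
```

Reporting p95 alongside the median matters because tail latency, not the average, is usually what breaks a latency budget.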

-

Instrument pipelines with OpenTelemetry → Prometheus + Grafana dashboards

Enforce data contracts & tests with Great Expectations; integrate alerting to Slack

Right-size clusters and use auto-scaling to cut cost by up to 40%

-

Team project: telemetry or click-stream pipeline → real-time dashboard & ML feature feed

Deliver architecture diagram, Terraform IaC, and ROI deck to a hiring-manager panel

Who Should Enrol?

-

Modernise batch ETL jobs into real-time, exactly-once pipelines.

-

Integrating Kafka / Flink into micro-services

Embed event-driven data flows and lakehouse zones into micro-service architectures.

-

Feed models with fresh features and support online prediction services

-

Operate high-throughput Kafka/Flink clusters with robust monitoring and auto-scaling.

-

Break into one of the fastest-growing data specialties with a portfolio-ready streaming project.

Professionals who need to stream, process, and serve data in real time—not overnight.

Prerequisites

Solid Python & SQL, basic cloud familiarity. Free Data Infra 101 & Docker-K8s Mini-Camp bridge badges are provided for all Intermediate-track graduates.

Career Pathways

-

Design and maintain high-throughput CDC & streaming pipelines.

-

Build and scale Kafka/Flink/Spark clusters with CI/CD and IaC.

-

Deliver sub-second dashboards and metrics built directly on event streams.

-

Own online/offline feature parity and latency budgets for production models.

-

Define lakehouse tiers, storage formats and cluster topologies for enterprise-wide streaming workloads.

Graduates leave with a production streaming pipeline, GitHub repo, and recruiter-ready stories—aligned with the majority of “Kafka/Flink/Spark Streaming” roles advertised today.

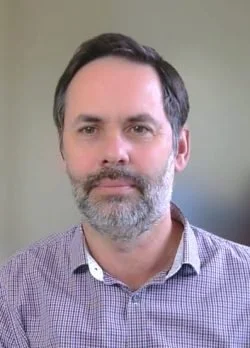

Amir Charkhi

Technology leader | Adjunct Professor | Founder

With 20+ years across energy, mining, finance, and government, Amir turns real-world data problems into production AI. He specialises in MLOps, cloud data engineering, and Python, and now shares that know-how as founder of AI Tech Institute and adjunct professor at UWA, where he designs hands-on courses in machine learning and LLMs.

Advanced: Real-Time Data Platforms



Frequently Asked Questions

-

Beginner courses: none. We start with Python basics.

Intermediate & Advanced: ability to write simple Python scripts and use Git is expected.

-

Plan on 8–10 hours per week: 2× 3-hour live sessions and 2–4 hours of project work. Advanced tracks may require up to 10 hours for capstone milestones.

-

All sessions are recorded and posted within 12 hours. You’ll still have access to Slack/Discord to ask instructors questions.

-

New intakes launch roughly every 8 weeks. Each course page shows the exact start date and the “Apply-by” deadline.

-

Just a laptop with Chrome/Firefox and a stable internet connection. All coding happens in cloud JupyterLab or VS Code Dev Containers—no local installs.

-

Yes. 100% refund until the end of Week 2, no questions asked. After that, pro-rata refunds apply if you need to withdraw for documented reasons.

-

Absolutely. We issue invoices to companies and offer interest-free 3- or 6-month payment plans.

-

Live Q&A in every session, 24-hour Slack response time from instructors, weekly office hours, and code reviews on your GitHub pull requests.