Are you ready to meet the demands of tomorrow? Evaluate your approach to skilling.

Build & Govern Generative-AI Products That Enterprises Trust

12 Weeks. Live Online Classes. Instructor-led.


Deploy autoscaling KServe/KEDA endpoints in the cloud—monitor cost & drift

Design safe, compliant LLM systems—bias, privacy & PII guard-rails built-in

Build Retrieval-Augmented Generation (RAG) services with vector databases

What You Will Learn

- Fine-tune and evaluate transformers on Hugging Face
- Set up experiment tracking and reproducible environments with Conda/Poetry

- Build vector indexes with pgvector or Pinecone
- Chain prompts with LangChain and reach a median latency under 300 ms

- Measure factuality, toxicity and bias; create automated regression suites
- Integrate guard-rail frameworks (Guardrails.ai / Rebuff)

- Containerise models with Docker multi-stage builds; deploy them via KServe and Helm
- Autoscale with KEDA; emit Prometheus metrics and build Grafana dashboards

- Add JWT/OAuth authentication, rate limiting, and data-loss-prevention (DLP) filters
- Generate audit logs for SOC 2 / ISO 27001 compliance

- Complete a team project on real enterprise data (finance, energy, retail)
- Deliver a live URL, a design doc, and an ROI/cost analysis

Unlock the full power of large language models while meeting enterprise-grade security and compliance requirements. In this 12-week cohort you’ll design and deploy Retrieval-Augmented Generation (RAG) services on Kubernetes, wire in bias and PII guard-rails, and build cost dashboards that keep CFOs happy. You’ll graduate with a live KServe endpoint, an MLflow-tracked fine-tune, and a governance playbook: exactly what hiring managers expect from a modern Generative-AI or LLM Engineer.

Who Should Enrol?

Engineers and data professionals who need to ship Gen-AI features to production without compromising safety or cost.

Prerequisites

Solid Python and Git skills, basic ML/LLM awareness, and roughly 8–10 hours per week for classes and labs.

(Free Deep Learning Core & Docker-K8s Mini-camp bridge badges are provided for all Intermediate-track graduates.)

- You’re already training models; now you need to deploy Gen-AI services that meet latency, cost and safety SLAs.
- You want to embed LLM calls, vector search or RAG pipelines into existing apps without turning production into a research lab.
- Move beyond notebooks to shipping generative models and RAG pipelines behind robust APIs, with monitoring, A/B guards and rollback plans.
- Become the go-to operator for GPU workloads, prompt-traffic observability and secure model–data pipelines.
- Fast-track into one of tech’s hottest niches by learning the end-to-end Gen-AI stack, from fine-tuning to feature flags.

Career Pathways

- Design, tune and deploy LLM workflows that power chatbots, copilot features and creative-content engines.
- Build retrieval-augmented generation pipelines that fuse proprietary data with frontier models, safely and at scale.
- Package and orchestrate models with CI/CD, feature stores, vector DBs and on-call monitoring dashboards.
- Translate fuzzy product briefs into user-centric Gen-AI features, balancing UX, cost and compliance.
- Scope end-to-end Gen-AI systems for enterprise clients, from data governance to cloud-spend optimisation.

Graduates leave with a live Gen-AI service, a public GitHub repo, a design document, and recruiter-friendly talking points, aligned to more than 70% of current “LLM Engineer” job posts.

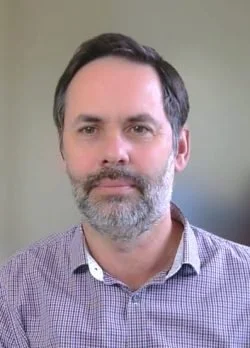

Amir Charkhi

Technology leader | Adjunct Professor | Founder

With 20+ years across energy, mining, finance, and government, Amir turns real-world data problems into production AI. He specialises in MLOps, cloud data engineering, and Python, and now shares that know-how as founder of AI Tech Institute and adjunct professor at UWA, where he designs hands-on courses in machine learning and LLMs.

Advanced: Generative AI Systems & Safety



Frequently Asked Questions

- Beginner courses require no prior experience; we start with Python basics. Intermediate and Advanced courses expect you to be able to write simple Python scripts and use Git.
- Plan on 8–10 hours per week: two 3-hour live sessions plus 2–4 hours of project work. Advanced tracks may require up to 10 hours for capstone milestones.
- All sessions are recorded and posted within 12 hours, and you can still ask instructors questions on Slack/Discord.
- New intakes launch roughly every 8 weeks. Each course page shows the exact start date and the “Apply-by” deadline.
- Just a laptop with Chrome or Firefox and a stable internet connection. All coding happens in cloud JupyterLab or VS Code Dev Containers, so there is nothing to install locally.
- Yes. You get a 100% refund until the end of Week 2, no questions asked. After that, pro-rata refunds apply if you need to withdraw for documented reasons.
- Absolutely. We issue invoices to companies and offer interest-free 3- or 6-month payment plans.
- Live Q&A in every session, a 24-hour Slack response time from instructors, weekly office hours, and code reviews on your GitHub pull requests.