Are you ready to meet the demands of tomorrow? Evaluate your approach to skilling.

Engineer Cloud-Scale Data Pipelines

12 Weeks. Live Online Classes.

Accepting Expressions of Interest (EOIs)

Deploy real-time data systems

Design robust ETL & ELT pipelines

Manage distributed data processing

What will you learn?

-

• Write analytic queries with CTEs, window functions, and time-series tricks that power real dashboards.

• Model data in star and data-vault schemas; refactor legacy ETL into ELT using dbt and version-controlled SQL.

• Set up development, staging, and prod environments in Snowflake / BigQuery with Terraform for IaC.

-

• Compare Redshift, BigQuery, Snowflake, and Azure Synapse—choose the right engine for volume, velocity, and cost.

• Build a lakehouse on S3 / ADLS / GCS with open formats (Parquet, Delta).

• Automate partitioning, clustering, and lifecycle policies to cut storage bills by up to 40%.

-

• Spin up Databricks notebooks, optimise Spark jobs, and tune shuffle-heavy pipelines.

• Use PySpark, Delta Live Tables, and Auto Loader to ingest billions of rows reliably.

• Benchmark against Apache Beam on Dataflow for portability decisions.

-

• Design exactly-once pipelines with Apache Kafka, Kafka Connect, and ksqlDB.

• Build real-time ETL with Spark Structured Streaming and windowed aggregations.

• Implement change-data-capture (CDC) from PostgreSQL/MySQL into the warehouse with a latency of 5 seconds or less.

-

• Orchestrate batch + streaming jobs with Apache Airflow DAGs, sensors, and SLAs.

• Enforce data contracts & quality tests via Great Expectations.

• Track end-to-end lineage in OpenLineage and surface “blast radius” for schema changes.

-

• Team up on a real client challenge (IoT telemetry, retail click-stream, or renewable-asset SCADA).

• Deliver a production-grade pipeline: ingestion → transformation → BI dashboard on a live URL.

• Present architecture diagram, cost breakdown, and scaling plan to a panel of hiring managers.

Engineer cloud-scale pipelines that keep analytics teams running. Starting with modern ELT in dbt and star and data-vault schemas, you’ll master Snowflake, BigQuery and Delta Lake storage, optimise Spark and Databricks for terabyte workloads, and build exactly-once streaming with Kafka and ksqlDB. The capstone delivers a production-grade pipeline plus lineage, cost dashboards and Terraform IaC: proof you’re ready for Data or Analytics Engineering roles in AWS, Azure or GCP environments.

Who Should Enrol?

Tech professionals who can write basic Python and SQL, and are now ready to build cloud-scale, production-grade data pipelines.

Prerequisites

Completion of our Python & Git Kick-start and Data & SQL Essentials (or equivalent), plus 8-10 hrs/week for live sessions, labs, and project work.

-

Seeking to move closer to data infrastructure.

-

Who want to own the pipes, not just the dashboards.

-

Tasked with modernising ETL and embracing DataOps.

-

Targeting their first Data Engineering or Analytics Engineering role.

Career Pathways

Graduates leave with a portfolio, GitHub repo, and recruiter-friendly talking points aligned to entry-level requisitions.

-

Architect and maintain ETL/ELT pipelines, optimise warehouse cost/performance, ensure data freshness SLAs.

-

Transform raw tables into analytics-ready marts using dbt, enforce data quality tests, partner with BI teams for self-service reporting.

-

Build streaming applications on Kafka/Flink/Spark, implement CDC, and design alerting for low-latency systems.

-

Select storage & processing engines, write IaC, design security & IAM policies across AWS, Azure, or GCP.

-

Automate orchestration with Airflow/Dagster, monitor pipelines, manage CI/CD for data code, and uphold lineage & governance.

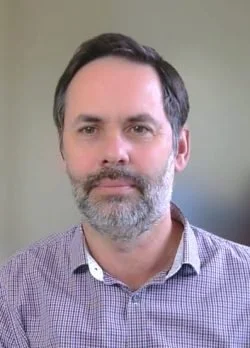

Amir Charkhi

Technology leader | Adjunct Professor | Founder

With 20+ years across energy, mining, finance, and government, Amir turns real-world data problems into production AI. He specialises in MLOps, cloud data engineering, and Python, and now shares that know-how as founder of AI Tech Institute and adjunct professor at UWA, where he designs hands-on courses in machine learning and LLMs.

Intermediate: Data Engineering Course

Frequently Asked Questions

-

Beginner courses: none; we start with Python basics.

Intermediate & Advanced: ability to write simple Python scripts and use Git is expected.

-

Plan on 8–10 hours per week: two 3-hour live sessions and 2–4 hours of project work. Advanced tracks may require up to 10 hours for capstone milestones.

-

All sessions are recorded and posted within 12 hours. You’ll still have access to Slack/Discord to ask instructors questions.

-

New intakes launch roughly every 8 weeks. Each course page shows the exact start date and the “Apply-by” deadline.

-

Just a laptop with Chrome/Firefox and a stable internet connection. All coding happens in cloud JupyterLab or VS Code Dev Containers—no local installs.

-

Yes. 100% refund until the end of Week 2, no questions asked. After that, pro-rata refunds apply if you need to withdraw for documented reasons.

-

Absolutely. We issue invoices to companies and offer interest-free 3- or 6-month payment plans.

-

Live Q&A in every session, 24-hour Slack response time from instructors, weekly office hours, and code reviews on your GitHub pull requests.