Transform Unstructured Text Into Instant, Accurate Answers

12 Weeks. Live Online Classes. Instructor-led.

Ship multilingual NLP systems with monitoring, guardrails, and governance baked in

Craft vector & hybrid search pipelines using pgvector, Pinecone, and Elasticsearch

Engineer Retrieval-Augmented Generation (RAG) services that ground LLMs in trusted data

What You Will Learn

-

Generate sentence-level embeddings with OpenAI, Cohere & Hugging Face models

Design efficient HNSW/IVF indexes in pgvector or Milvus, and compare them with Pinecone's managed indexes

Tune distance metrics, index parameters, and recall/latency trade-offs

-

Combine dense (vector) and sparse (BM25) scores in Elasticsearch hybrid search

Train LightGBM / XGBoost learning-to-rank models on click-through logs

Evaluate NDCG, MRR, and success@k; instrument A/B experiments

-

Chain retriever → re-ranker → LLM answerer with LangChain or LlamaIndex

Apply chunking, metadata filters, and Maximal Marginal Relevance (MMR) to improve relevance

Expose gRPC/REST APIs; benchmark answers for factual grounding & latency

-

Fine-tune models (e.g., MiniLM, Mistral-7B) on domain-specific corpora

Integrate language detection and neural machine translation (MT) for cross-lingual retrieval

Apply transfer-learning techniques to keep GPU bills low

-

Build automated hallucination & toxicity tests with Promptfoo, and prompt-injection checks with Rebuff

Export metrics to Prometheus; build Grafana dashboards for drift & latency

Produce governance documentation covering GDPR compliance, privacy, and data provenance

-

Team proof of concept (POC): a policy-search, knowledge-base Q&A, or legal-document RAG system

Present architecture diagrams, a cost model, and a live demo to a hiring-manager panel
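The offline metrics named in the syllabus (NDCG, MRR, success@k) are straightforward to compute once you have a ranked list of document IDs per query and a set of relevance judgements. The sketch below is a dependency-free illustration under those assumptions; the function names and sample IDs are hypothetical rather than taken from the course materials, and NDCG here uses linear gains with a log2 discount (some toolkits use exponential gains, 2^rel - 1, instead).

```python
import math
from typing import Dict, List, Sequence, Set


def reciprocal_rank(ranked: Sequence[str], relevant: Set[str]) -> float:
    """1 / (rank of the first relevant document), or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(ranked, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs: List[Sequence[str]], qrels: List[Set[str]]) -> float:
    """MRR: average reciprocal rank over all queries (one ranked list per query)."""
    if not runs:
        return 0.0
    return sum(reciprocal_rank(r, q) for r, q in zip(runs, qrels)) / len(runs)


def success_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """success@k: 1.0 if any relevant document appears in the top k, else 0.0."""
    return 1.0 if any(doc_id in relevant for doc_id in ranked[:k]) else 0.0


def ndcg_at_k(ranked: Sequence[str], gains: Dict[str, float], k: int) -> float:
    """NDCG@k with graded (linear) gains and a log2(rank + 1) position discount."""

    def dcg(scores: Sequence[float]) -> float:
        return sum(g / math.log2(rank + 1) for rank, g in enumerate(scores, start=1))

    actual = dcg([gains.get(doc_id, 0.0) for doc_id in ranked[:k]])
    ideal = dcg(sorted(gains.values(), reverse=True)[:k])
    return actual / ideal if ideal > 0 else 0.0


# Hypothetical example: one query, three retrieved documents.
ranked = ["d3", "d1", "d7"]
print(reciprocal_rank(ranked, {"d1"}))    # 0.5
print(success_at_k(ranked, {"d1"}, k=1))  # 0.0
print(success_at_k(ranked, {"d1"}, k=2))  # 1.0
print(ndcg_at_k(ranked, {"d1": 3.0, "d7": 1.0}, k=3))
```

MRR is then just the mean of `reciprocal_rank` across a query set, which is what `mean_reciprocal_rank` computes.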

Turn terabytes of documents into instant, trustworthy answers. You’ll master embeddings and hybrid BM25 + vector ranking, and build multilingual RAG pipelines with pgvector, Pinecone, LangChain and OpenAI. Automated hallucination tests, governance dashboards, and Prometheus monitoring help keep your search or Q&A service accurate, compliant, and fast. Ideal for engineers modernising legacy Lucene stacks or delivering AI-powered knowledge bases in the finance, legal, or energy sectors.
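The hybrid BM25 + vector ranking described above can be sketched as a weighted linear fusion of normalised scores. This is a minimal plain-Python illustration, assuming each retriever returns a dict mapping document ID to raw score; it is not Elasticsearch's built-in hybrid implementation, and the weight `alpha`, the min-max normalisation, and the sample scores are all illustrative assumptions.

```python
from typing import Dict, List, Tuple


def min_max(scores: Dict[str, float]) -> Dict[str, float]:
    """Rescale one retriever's raw scores to [0, 1] so BM25 and cosine scores are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        # Every hit has the same score: treat them all as equally strong.
        return {doc_id: 1.0 for doc_id in scores}
    return {doc_id: (s - lo) / (hi - lo) for doc_id, s in scores.items()}


def hybrid_fuse(
    bm25: Dict[str, float], dense: Dict[str, float], alpha: float = 0.5
) -> List[Tuple[str, float]]:
    """Rank documents by alpha * dense + (1 - alpha) * bm25 after min-max normalisation.

    A document returned by only one retriever gets 0.0 for the other side.
    """
    b, v = min_max(bm25), min_max(dense)
    fused = {
        doc_id: alpha * v.get(doc_id, 0.0) + (1 - alpha) * b.get(doc_id, 0.0)
        for doc_id in set(b) | set(v)
    }
    return sorted(fused.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical scores: raw BM25 from a keyword index, cosine similarity from a vector index.
bm25_scores = {"d1": 12.0, "d2": 8.0, "d3": 4.0}
dense_scores = {"d2": 0.91, "d4": 0.85, "d1": 0.60}
print([doc_id for doc_id, _ in hybrid_fuse(bm25_scores, dense_scores)])  # ['d2', 'd1', 'd4', 'd3']
```

Raising `alpha` weights the dense retriever more heavily; in practice it would be tuned against an offline metric such as NDCG. Rank-based schemes such as reciprocal rank fusion (RRF) are a common alternative that sidesteps score normalisation altogether.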

Who Should Enrol?

-

Upgrade from TF-IDF and keyword scoring to dense-vector retrieval, hybrid rankers and RAG pipelines that can compete with commercial SaaS search.

-

Add text-heavy use cases (chatbots, document Q&A, semantic search) to your model portfolio and learn to serve them within latency and cost SLAs.

-

Move beyond analytics to production-grade NLP: embeddings, transformers, evaluation suites and online A/B relevance tests.

-

Embed vector databases, similarity APIs and streaming ingestion into existing micro-service architectures—without breaking auth or billing.

-

Understand how modern retrieval works so you can scope, prioritise and govern enterprise search projects with vendors or in-house teams.

Professionals who must turn mountains of text into search and Q&A experiences users trust.

Prerequisites

Solid Python & Git skills, plus basic ML knowledge. Free Deep Learning Core and Docker-K8s Mini-Camp bridge badges are included for all Intermediate-track graduates.

Career Pathways

-

Build and optimise dense-vector, hybrid and RAG search stacks that power chat, FAQ and knowledge-base experiences.

-

Design offline metrics and online A/B tests, fine-tune LLM rankers and iterate on relevance signals at scale.

-

Integrate embedding services, vector DBs, orchestration frameworks and observability into cloud-native platforms.

-

Scope end-to-end IR solutions for large document corpora, balancing latency, accuracy and cost.

-

Translate business questions into scalable NLP/search architectures and roadmaps that engineering teams can execute.

Graduates leave with a live enterprise search stack (vector DB + RAG API), a publish-ready design doc, and recruiter-ready stories that map to the requirements of typical “Search/NLP Engineer” job postings.

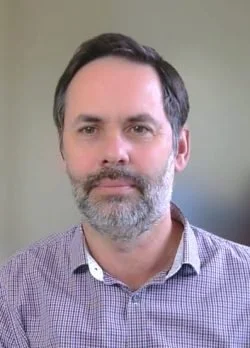

Amir Charkhi

Technology leader | Adjunct Professor | Founder

With 20+ years across energy, mining, finance, and government, Amir turns real-world data problems into production AI. He specialises in MLOps, cloud data engineering, and Python, and now shares that know-how as founder of AI Tech Institute and adjunct professor at UWA, where he designs hands-on courses in machine learning and LLMs.

Advanced: Enterprise NLP & Search

12 Weeks. Live Online Classes.

Frequently Asked Questions

-

Beginner courses: none; we start with Python basics.

Intermediate & Advanced: you should be able to write simple Python scripts and use Git.

-

Plan on 8–10 hours per week: two 3-hour live sessions plus 2–4 hours of project work. Advanced tracks may need the full 10 hours during capstone milestones.

-

All sessions are recorded and posted within 12 hours. You’ll still have access to Slack/Discord to ask instructors questions.

-

New intakes launch roughly every 8 weeks. Each course page shows the exact start date and the “Apply-by” deadline.

-

Just a laptop with Chrome/Firefox and a stable internet connection. All coding happens in cloud JupyterLab or VS Code Dev Containers—no local installs.

-

Yes. You get a 100% refund until the end of Week 2, no questions asked. After that, pro-rata refunds apply if you need to withdraw for documented reasons.

-

Absolutely. We issue invoices to companies and offer interest-free 3- or 6-month payment plans.

-

Live Q&A in every session, a 24-hour Slack response time from instructors, weekly office hours, and code reviews on your GitHub pull requests.